Nissan to display trippy Invisible-to-Visible technology at CES

Nissan plans to use its space at CES next week to display what it's calling Invisible-to-Visible connected-car technology via an interactive, three-dimensional immersion experience that merges virtual reality and avatars of real people with more humdrum real-world data.

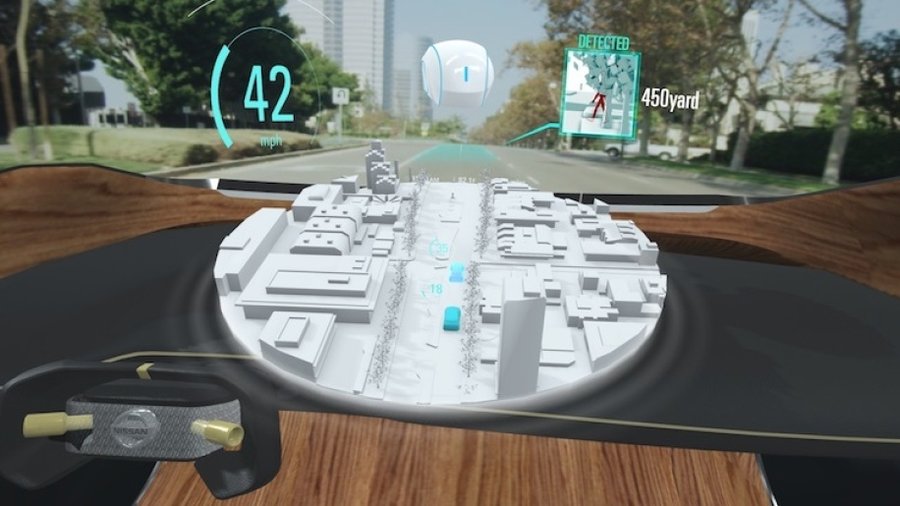

Dubbed I2V for short, the technology will combine information amassed from sensors outside and inside the vehicle with data from the cloud. It will not only track the vehicle's immediate surroundings but also anticipate what's not visible to the driver, such as approaching vehicles or pedestrians concealed behind buildings or around a corner, and overlay those images on the driver's field of view. It sounds similar to the "smart intersection" vehicle-to-everything technology Honda has been developing at a pilot project in Marysville, Ohio. But Nissan goes further by offering "human-like" interactive guidance to the driver, including through avatars that appear inside the car.

The technology gets a bit complicated, as the second photo in the slideshow above attests, and it's definitely far-out. It's powered by Nissan's Omni-Sensing technology, which includes the more familiar ProPilot semi-autonomous driving system and other systems that gather real-time data from the immediate traffic environment and inside the vehicle. It maps a 360-degree virtual space around the vehicle, and it can monitor people inside the car to anticipate when they might need help with wayfinding or could use a caffeine break to stay awake. Perhaps most interestingly, it connects driver and passengers to people in the virtual "metaverse," which Nissan says makes it possible for family, friends or others to appear inside the car as 3D, augmented reality avatars to provide company or assistance.

Just imagine, Nissan suggests, the scenery of a sunny day projected inside the vehicle when it's gray and rainy outside. Or a knowledgeable local guide (read: an Instagram influencer!) who can help introduce you to a new, unfamiliar locale or attraction. (Or a hologram of your mother riding shotgun, barking out directions and reminders to call your ailing father while you struggle to navigate rush-hour traffic.) Information provided by the virtual guide can be collected and stored either in the cloud for later use by others or in the onboard artificial-intelligence system to provide a more efficient trip through local areas of interest.

You can also find a professional driver in the metaverse to get personal instruction via a virtual chase car that appears in front of you and demonstrates the best driving techniques for the traffic or weather conditions. The system can even scan for available parking spaces and park the car for you automatically.

"The interactive features create an experience that's tailored to your interests and driving style so that anyone can enjoy using it in their own way," Tetsuro Ueda, a leader at the Nissan Research Center, said in a statement.

It's part of Nissan Intelligent Mobility, the automaker's vision for the future of how cars are powered, driven and integrated into society, and we look forward to seeing how it all works when CES opens Jan. 8. Nissan is also rumored to be planning to bring along the much-anticipated longer-range Leaf EV, which was a late scratch from the L.A. Auto Show in November due to the fallout from the Carlos Ghosn saga.
