A New System Lets Self-Driving Cars 'Learn' Streets on the Fly

From the release:

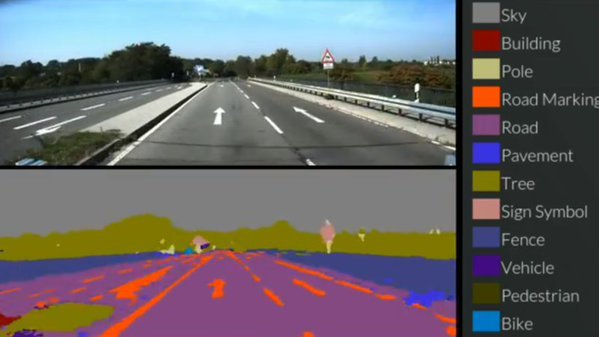

The first system, called SegNet, can take an image of a street scene it hasn't seen before and classify it, sorting objects into 12 different categories, such as roads, street signs, pedestrians, buildings and cyclists, in real time. It can deal with light, shadow and night-time environments, and currently labels more than 90% of pixels correctly. Previous systems using expensive laser- or radar-based sensors have not been able to reach this level of accuracy while operating in real time.
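To make the "90% of pixels" figure concrete, here is a minimal sketch of how a per-pixel accuracy score is typically computed. It is not SegNet's own evaluation code; the function name `pixel_accuracy`, the tiny label maps and the class ids are all made up for illustration.

```python
def pixel_accuracy(predicted, ground_truth):
    """Return the fraction of pixels whose predicted class matches the true class."""
    total = 0
    correct = 0
    for pred_row, true_row in zip(predicted, ground_truth):
        for pred_label, true_label in zip(pred_row, true_row):
            total += 1
            if pred_label == true_label:
                correct += 1
    return correct / total if total else 0.0


# Hypothetical 2x3 label maps. Each integer is an assumed class id
# (for example 0 = road, 1 = pedestrian, 2 = building).
predicted = [[0, 0, 1],
             [2, 2, 1]]
ground_truth = [[0, 0, 1],
                [2, 1, 1]]

accuracy = pixel_accuracy(predicted, ground_truth)
print(f"{accuracy:.1%} of pixels labelled correctly")
```

In this toy case five of the six pixels match. A real evaluation would run the same comparison over full-resolution images and all 12 categories.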

The second system, interestingly, allows a vehicle to orient itself no matter where it is. It can "look" at an image and assess its "location and orientation within a few metres and a few degrees." That makes the system far more accurate than GPS, and it requires no wireless connection to analyze and report a position.

You can try SegNet now by sending it down a random road in your town. The system will analyze random images of roads and tell you what it sees.

The benefit of this sort of system is that it eschews GPS entirely and instead focuses on machine learning in 3D space. It's not quite perfect yet.

"In the short term, we're more likely to see this sort of system on a domestic robot – such as a robotic vacuum cleaner, for instance," said research leader Professor Roberto Cipolla. "It will take time before drivers can fully trust an autonomous car, but the more effective and accurate we can make these technologies, the closer we are to the widespread adoption of driverless cars and other types of autonomous robotics."
